One way I like to view the whole "intelligent agent" idea is to compare an intelligent agent to a house fly, then to a mouse, then to a cat or a dog, then to a pig, then to a toddler, then to a child of 4, 5, 6 and so on up to 12, then to an adult, and beyond that to a self-evolving genius at or beyond the level of Einstein.

I think that comparing behaviors this way would help more people understand what you are talking about.

However, even making these comparisons would be extremely difficult, because machine intelligence is very different from human intelligence in how it arrives at a thought or conclusion. And if you add instinct or intuition, a machine could likely mimic both, but would that be genuine, or just an imitation of how a human does it? So if an intelligent agent were mimicking a human agent, who would really be fooled in the end?

It likely would depend upon the circumstances.

For example, if you didn't know that something pretending to be a real human wasn't one, how would you actually find out?

It would depend on whether you had to communicate with the entity, and on whether it stood out in a crowd in any way. So the more drab and ordinary a robotic creation's clothing, and the more normally it walked and acted, the less likely you would be to guess that it wasn't a human being, even now!

begin quote from:

"intelligent agents"

Intelligent agent

From Wikipedia, the free encyclopedia

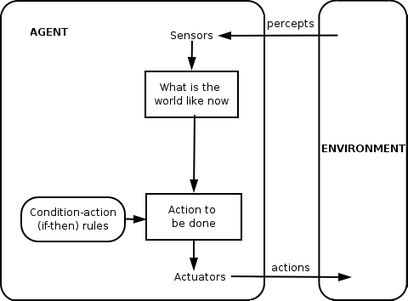

Simple reflex agent

Intelligent agents in artificial intelligence are closely related to agents in economics, and versions of the intelligent agent paradigm are studied in cognitive science, ethics, the philosophy of practical reason, as well as in many interdisciplinary socio-cognitive modeling and computer social simulations.

Intelligent agents are also closely related to software agents (autonomous computer programs that carry out tasks on behalf of users). In computer science, the term intelligent agent may be used to refer to a software agent that has some intelligence, even if it is not a rational agent by Russell and Norvig's definition. For example, autonomous programs used for operator assistance or data mining (sometimes referred to as bots) are also called "intelligent agents".

A variety of definitions

Intelligent agents have been defined in many different ways.[3] According to Nikola Kasabov,[4] AI systems should exhibit the following characteristics:

- Accommodate new problem solving rules incrementally

- Adapt online and in real time

- Are able to analyze themselves in terms of behavior, error and success

- Learn and improve through interaction with the environment (embodiment)

- Learn quickly from large amounts of data

- Have memory-based exemplar storage and retrieval capacities

- Have parameters to represent short and long term memory, age, forgetting, etc.

Structure of agents

A simple agent program can be defined mathematically as an agent function[5] which maps every possible percept sequence to a possible action the agent can perform, or to a coefficient, feedback element, function or constant that affects eventual actions:

f : P* → A

The agent program, instead, maps every possible percept to an action.

We use the term percept to refer to the agent's perceptual inputs at any given instant. In the following figures, an agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.
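
The difference between the agent function and the agent program can be sketched in a few lines of Python. This is only an illustration, assuming a two-square vacuum world in the style of Russell and Norvig; the names `AGENT_FUNCTION`, `table_driven_agent` and `agent_program` are hypothetical and the table is deliberately incomplete.

```python
from typing import Callable, Dict, List, Tuple

Percept = Tuple[str, str]   # (location, status), e.g. ("A", "Dirty")
Action = str

# Agent function: a lookup table keyed by the whole percept sequence so far.
# Only a few entries are filled in (an assumption for illustration);
# a complete table would grow without bound.
AGENT_FUNCTION: Dict[Tuple[Percept, ...], Action] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
    (("A", "Clean"), ("B", "Clean")): "Left",
}


def table_driven_agent() -> Callable[[Percept], Action]:
    """Return an agent that remembers every percept and looks up the sequence."""
    history: List[Percept] = []

    def step(percept: Percept) -> Action:
        history.append(percept)
        # Fall back to "NoOp" when the sequence is missing from the table.
        return AGENT_FUNCTION.get(tuple(history), "NoOp")

    return step


def agent_program(percept: Percept) -> Action:
    """Agent program: decides from the current percept alone."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"
```

The table-driven version needs one entry per percept sequence, which is why practical agents are written as programs over the current percept (plus whatever internal state they keep).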

Architectures

Weiss (2013) said we should consider four classes of agents:

- Logic-based agents – in which the decision about what action to perform is made via logical deduction;

- Reactive agents – in which decision making is implemented in some form of direct mapping from situation to action;

- Belief-desire-intention agents – in which decision making depends upon the manipulation of data structures representing the beliefs, desires, and intentions of the agent; and finally,

- Layered architectures – in which decision making is realized via various software layers, each of which is more or less explicitly reasoning about the environment at different levels of abstraction.

Classes

- simple reflex agents

- model-based reflex agents

- goal-based agents

- utility-based agents

- learning agents

Simple reflex agents

Simple reflex agents act only on the basis of the current percept, ignoring the rest of the percept history. The agent function is based on the condition-action rule: if condition then action.

This agent function only succeeds when the environment is fully observable. Some reflex agents can also contain information on their current state which allows them to disregard conditions whose actuators are already triggered.

Infinite loops are often unavoidable for simple reflex agents operating in partially observable environments. Note: If the agent can randomize its actions, it may be possible to escape from infinite loops.
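
The loop problem and the randomization escape can be sketched as follows. This is a toy example, assuming a two-square world in which the agent senses only whether its current square is dirty and not where it is; `reflex_agent`, `randomized_reflex_agent` and `run` are hypothetical names.

```python
import random


def reflex_agent(status: str) -> str:
    """Deterministic condition-action rules over the current percept only."""
    if status == "Dirty":
        return "Suck"
    return "Left"  # always Left when clean, so it can get stuck at the left wall


def randomized_reflex_agent(status: str, rng: random.Random) -> str:
    """Same rules, but picks a random direction when the square is clean."""
    if status == "Dirty":
        return "Suck"
    return rng.choice(["Left", "Right"])


def run(agent, steps: int = 50) -> bool:
    """Squares A (left) and B (right); start in A with only B dirty.

    Returns True if every square is clean within the step budget.
    """
    location = "A"
    dirty = {"A": False, "B": True}
    for _ in range(steps):
        action = agent("Dirty" if dirty[location] else "Clean")
        if action == "Suck":
            dirty[location] = False
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"  # moving Left from A just stays in A
        if not any(dirty.values()):
            return True
    return False
```

Here `run(reflex_agent)` never finishes the job, because the agent keeps bumping into the left wall, while a call such as `run(lambda s: randomized_reflex_agent(s, random.Random(0)))` will almost certainly reach square B and clean it.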

Model-based reflex agents

A model-based agent can handle partially observable environments. Its current state is stored inside the agent, which maintains some kind of structure describing the part of the world that cannot be seen. This knowledge about "how the world works" is called a model of the world, hence the name "model-based agent".

A model-based reflex agent should maintain some sort of internal model that depends on the percept history and thereby reflects at least some of the unobserved aspects of the current state. The percept history and the impact of actions on the environment can be determined using the internal model. The agent then chooses an action in the same way as a reflex agent.
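
A minimal sketch of this idea, assuming the same two-square vacuum world: the agent sees only its own square, but keeps a small model of what it believes about both. The class name `ModelBasedVacuum` and its rules are illustrative assumptions, not a reference design.

```python
class ModelBasedVacuum:
    """Reflex agent with an internal model of squares it cannot currently see."""

    def __init__(self):
        # Believed status of each square; None means "not yet observed".
        self.model = {"A": None, "B": None}

    def step(self, percept):
        location, status = percept
        # Update the model from the latest percept.
        self.model[location] = status
        if status == "Dirty":
            # Record the expected effect of the action on the world.
            self.model[location] = "Clean"
            return "Suck"
        # With the model, the agent can tell when there is nothing left to do.
        if all(value == "Clean" for value in self.model.values()):
            return "NoOp"
        return "Right" if location == "A" else "Left"
```

Unlike the simple reflex agent, this one can stop once its model says both squares are clean, even though it never perceives both at once.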

Goal-based agents

Goal-based agents further expand on the capabilities of the model-based agents by using "goal" information. Goal information describes situations that are desirable. This gives the agent a way to choose among multiple possibilities, selecting the one which reaches a goal state. Search and planning are the subfields of artificial intelligence devoted to finding action sequences that achieve the agent's goals.

A goal-based agent is more flexible because the knowledge that supports its decisions is represented explicitly and can be modified.
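
As a rough illustration of search in a goal-based agent, the sketch below uses breadth-first search to find a shortest action sequence on a small grid. The grid world and the names `plan` and `grid_successors` are assumptions made for this example.

```python
from collections import deque


def grid_successors(state, size=3):
    """Yield (action, next_state) pairs for a size x size grid."""
    x, y = state
    moves = {
        "Up": (x, y + 1),
        "Down": (x, y - 1),
        "Left": (x - 1, y),
        "Right": (x + 1, y),
    }
    for action, (nx, ny) in moves.items():
        if 0 <= nx < size and 0 <= ny < size:
            yield action, (nx, ny)


def plan(start, goal, successors):
    """Breadth-first search: shortest list of actions from start to goal, or None."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action, nxt in successors(state):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # goal is unreachable
```

Because the goal is passed in as data, the same agent can be pointed at a different goal, for example `plan((0, 0), (2, 1), grid_successors)` and then `plan((0, 0), (1, 2), grid_successors)`, without rewriting any rules.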

Utility-based agents

Goal-based agents only distinguish between goal states and non-goal states. It is possible to define a measure of how desirable a particular state is. This measure can be obtained through the use of a utility function which maps a state to a measure of the utility of the state. A more general performance measure should allow a comparison of different world states according to exactly how happy they would make the agent. The term utility can be used to describe how "happy" the agent is.

A rational utility-based agent chooses the action that maximizes the expected utility of the action outcomes, that is, what the agent expects to derive, on average, given the probabilities and utilities of each outcome. A utility-based agent has to model and keep track of its environment, tasks that have involved a great deal of research on perception, representation, reasoning, and learning.
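
Choosing by expected utility can be written out directly. In this sketch each action maps to a list of (probability, utility) pairs; the route names and numbers in `ACTIONS` are made up purely for illustration.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over an action's possible outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(action_outcomes):
    """Return the action whose outcomes have the highest expected utility."""
    return max(action_outcomes, key=lambda a: expected_utility(action_outcomes[a]))


# Hypothetical example: two routes with invented probabilities and utilities.
ACTIONS = {
    "highway": [(0.8, 10.0), (0.2, -20.0)],  # usually fast, sometimes jammed
    "back_road": [(1.0, 5.0)],               # slower but reliable
}
```

With these numbers the highway has an expected utility of about 4 and the back road exactly 5, so `choose_action(ACTIONS)` picks the back road even though the highway's best case is better.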

Learning agents

Learning has the advantage that it allows agents to initially operate in unknown environments and to become more competent than their initial knowledge alone might allow. The most important distinction is between the "learning element", which is responsible for making improvements, and the "performance element", which is responsible for selecting external actions.

The learning element uses feedback from the "critic" on how the agent is doing and determines how the performance element should be modified to do better in the future. The performance element is what we have previously considered to be the entire agent: it takes in percepts and decides on actions.

The last component of the learning agent is the "problem generator". It is responsible for suggesting actions that will lead to new and informative experiences.
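
The four components can be sketched in a very small learning agent. This version assumes a simple setting with a fixed set of actions and a numeric reward after each one; the class `LearningAgent`, the running-average update and the `explore_rate` parameter are illustrative choices, not something taken from the article.

```python
import random


class LearningAgent:
    """Toy learning agent: estimates the value of each action from rewards."""

    def __init__(self, actions, explore_rate=0.1, seed=0):
        self.values = {a: 0.0 for a in actions}  # knowledge used to act
        self.counts = {a: 0 for a in actions}
        self.explore_rate = explore_rate
        self.rng = random.Random(seed)

    def performance_element(self):
        """Select the action currently believed to be best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self):
        """Suggest an exploratory action that may be informative."""
        return self.rng.choice(list(self.values))

    def choose(self):
        """Mostly exploit, occasionally explore."""
        if self.rng.random() < self.explore_rate:
            return self.problem_generator()
        return self.performance_element()

    def critic(self, action, reward):
        """Feedback: how far the reward was from what the agent expected."""
        return reward - self.values[action]

    def learning_element(self, action, reward):
        """Move the estimate for this action toward the observed reward."""
        self.counts[action] += 1
        error = self.critic(action, reward)
        self.values[action] += error / self.counts[action]
```

A typical loop would call `choose()`, observe a reward from the environment, and pass both to `learning_element()`; over time the performance element's choices improve because the estimates it relies on have been updated.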

Other classes of intelligent agents

According to other sources, some of the sub-agents not already mentioned in this treatment may be part of an intelligent agent or a complete intelligent agent. They are:

- Decision Agents (that are geared to decision making);

- Input Agents (that process and make sense of sensor inputs – e.g. neural network based agents);

- Processing Agents (that solve a problem like speech recognition);

- Spatial Agents (that relate to the physical real-world);

- World Agents (that incorporate a combination of all the other classes of agents to allow autonomous behaviors).

- Believable Agents - A believable agent exhibits a personality through an artificial character (in which the agent is embedded) for the interaction.

- Physical Agents - A physical agent is an entity which perceives through sensors and acts through actuators.

- Temporal Agents - A temporal agent may use time-based stored information to offer instructions or data to a computer program or human being, and takes program inputs as percepts to adjust its next behaviors.

Hierarchies of agents

Main article: Multi-agent system

To actively perform their functions, intelligent agents today are normally gathered in a hierarchical structure containing many "sub-agents". Intelligent sub-agents process and perform lower level functions. Taken together, the intelligent agent and sub-agents create a complete system that can accomplish difficult tasks or goals with behaviors and responses that display a form of intelligence.

Applications

An example of an automated online assistant providing automated customer service on a webpage.

See also

- Software agent

- Cognitive architectures

- Cognitive radio – a practical field for implementation

- Cybernetics, Computer science

- Data mining agent

- Embodied agent

- Federated search – the ability for agents to search heterogeneous data sources using a single vocabulary

- Fuzzy agents – IA implemented with adaptive fuzzy logic

- GOAL agent programming language

- Intelligence

- Intelligent system

- JACK Intelligent Agents

- Multi-agent system and multiple-agent system – multiple interactive agents

- PEAS classification of an agent's environment

- Reinforcement learning

- Semantic Web – making data on the Web available for automated processing by agents

- Simulated reality

- Social simulation

References

- Russell, Stuart J.; Norvig, Peter (2003), Artificial Intelligence: A Modern Approach (2nd ed.), Upper Saddle River, New Jersey: Prentice Hall, ISBN 0-13-790395-2, chpt. 2

- Franklin, Stan; Graesser, Art (1996), Is it an Agent, or just a Program?: A Taxonomy for Autonomous Agents, Proceedings of the Third International Workshop on Agent Theories, Architectures, and Languages, Springer-Verlag

- N. Kasabov, Introduction: Hybrid intelligent adaptive systems. International Journal of Intelligent Systems, Vol.6, (1998) 453–454.

- Weiss, G. (2013). Multiagent systems (2nd ed.). Cambridge, MA: The MIT Press.
