I am also interested in all this in relation to "Enhanced Dictation", which I'm learning to interact with as well.

begin quote from:

(artificial neurons), loosely analogous to the observed behavior of a biological brain's axons

Artificial neuron

From Wikipedia, the free encyclopedia

The artificial neuron transfer function should not be confused with a linear system's transfer function.


Basic structure

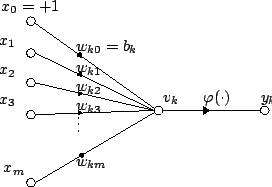

For a given artificial neuron k, let there be m + 1 inputs with signals x0 through xm and weights wk0 through wkm. Usually, the x0 input is assigned the value +1, which makes it a bias input with wk0 = bk. This leaves only m actual inputs to the neuron: from x1 to xm.

The output of the kth neuron is:

$$y_k = \varphi\left(\sum_{j=0}^{m} w_{kj} x_j\right)$$

where φ (phi) is the transfer function.

The output is analogous to the axon of a biological neuron, and its value propagates to the input of the next layer, through a synapse. It may also exit the system, possibly as part of an output vector.

It has no learning process as such. Its transfer function weights are calculated and its threshold value is predetermined.
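The output computation described above can be sketched in Python. This is only a minimal illustration: the function names `neuron_output` and `heaviside` and the example numbers are assumptions made here, not part of the quoted article. The bias is treated as weight w0 on a fixed input x0 = +1, as the text describes.

```python
def neuron_output(inputs, weights, bias, phi):
    """Return phi(sum_j w_j * x_j), with the bias as weight w_0 on a fixed input x_0 = +1.

    All names here are hypothetical; they only illustrate the formula in the text.
    """
    xs = [1.0] + list(inputs)    # x_0 = +1 is the bias input
    ws = [bias] + list(weights)  # w_0 = b is the bias weight
    u = sum(w * x for w, x in zip(ws, xs))
    return phi(u)


def heaviside(u):
    """A threshold transfer function: 1 when u is non-negative, else 0."""
    return 1.0 if u >= 0 else 0.0


# Two actual inputs (m = 2) plus the bias input.
y_step = neuron_output([0.5, -1.0], [2.0, 1.0], bias=0.5, phi=heaviside)
y_linear = neuron_output([0.5, -1.0], [2.0, 1.0], bias=0.5, phi=lambda u: u)
```

Passing the transfer function as a parameter keeps the weighted sum separate from the choice of φ, so the same neuron can be tried with a step, linear, or sigmoid function.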

Comparison to biological neurons

Artificial neurons are designed to mimic aspects of their biological counterparts.

- Dendrites – In a biological neuron, the dendrites act as the input vector. These dendrites allow the cell to receive signals from a large (>1000) number of neighboring neurons. As in the above mathematical treatment, each dendrite is able to perform "multiplication" by that dendrite's "weight value." The multiplication is accomplished by increasing or decreasing the ratio of synaptic neurotransmitters to signal chemicals introduced into the dendrite in response to the synaptic neurotransmitter. A negative multiplication effect can be achieved by transmitting signal inhibitors (i.e. oppositely charged ions) along the dendrite in response to the reception of synaptic neurotransmitters.

- Soma – In a biological neuron, the soma acts as the summation function, seen in the above mathematical description. As positive and negative signals (exciting and inhibiting, respectively) arrive in the soma from the dendrites, the positive and negative ions are effectively added in summation, by simple virtue of being mixed together in the solution inside the cell's body.

- Axon – The axon gets its signal from the summation behavior which occurs inside the soma. The opening to the axon essentially samples the electrical potential of the solution inside the soma. Once the soma reaches a certain potential, the axon will transmit an all-in signal pulse down its length. In this regard, the axon corresponds to the connection that lets us link our artificial neuron to other artificial neurons.

History

The first artificial neuron was the Threshold Logic Unit (TLU), or Linear Threshold Unit,[2] first proposed by Warren McCulloch and Walter Pitts in 1943. The model was specifically targeted as a computational model of the "nerve net" in the brain.[3] As a transfer function, it employed a threshold, equivalent to using the Heaviside step function. Initially, only a simple model was considered, with binary inputs and outputs, some restrictions on the possible weights, and a more flexible threshold value. It was noticed from the beginning that any boolean function could be implemented by networks of such devices, which is easily seen from the fact that one can implement the AND and OR functions, and use them in the disjunctive or the conjunctive normal form. Researchers also soon realized that cyclic networks, with feedbacks through neurons, could define dynamical systems with memory, but most of the research concentrated (and still does) on strictly feed-forward networks because they are easier to work with.

One important and pioneering artificial neural network that used the linear threshold function was the perceptron, developed by Frank Rosenblatt. This model already considered more flexible weight values in the neurons, and was used in machines with adaptive capabilities. The representation of the threshold values as a bias term was introduced by Bernard Widrow in 1960 – see ADALINE.
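The remark in this history that AND and OR can be built from threshold units, and then combined in a normal form, can be made concrete with a small Python sketch. Everything in it is an assumption for illustration: the helper `tlu`, the chosen weights and thresholds, the "fires when the sum reaches the threshold" convention, and the use of a negative weight for NOT (the original 1943 model restricted the possible weights).

```python
def tlu(weights, threshold, inputs):
    """Hypothetical threshold unit: fires when the sum of the weights
    on its active inputs reaches the threshold."""
    total = sum(w for w, x in zip(weights, inputs) if x)
    return total >= threshold


def AND(a, b):
    return tlu([1, 1], 2, [a, b])  # both inputs are needed to reach 2


def OR(a, b):
    return tlu([1, 1], 1, [a, b])  # a single active input reaches 1


def NOT(a):
    return tlu([-1], 0, [a])       # an active input pulls the sum below 0


def XOR(a, b):
    """Disjunctive normal form: (a AND NOT b) OR (NOT a AND b)."""
    return OR(AND(a, NOT(b)), AND(NOT(a), b))


for a in (False, True):
    for b in (False, True):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

XOR is a useful test case because no single threshold unit of this kind computes it, while a small network of them does.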

In the late 1980s, when research on neural networks regained strength, neurons with more continuous shapes started to be considered. The possibility of differentiating the activation function allows the direct use of gradient descent and other optimization algorithms for the adjustment of the weights. Neural networks also started to be used as a general function approximation model. The best-known training algorithm, backpropagation, has been rediscovered several times, but its first development goes back to the work of Paul Werbos.[4][5]

Types of transfer functions

The transfer function of a neuron is chosen to have a number of properties which either enhance or simplify the network containing the neuron. Crucially, for instance, any multilayer perceptron using a linear transfer function has an equivalent single-layer network[citation needed]; a non-linear function is therefore necessary to gain the advantages of a multi-layer network.

Below, u refers in all cases to the weighted sum of all the inputs to the neuron, i.e. for n inputs,

$$u = \sum_{i=1}^{n} w_i x_i$$

where w is the vector of weights and x is the vector of inputs.

Step function

The output y of this transfer function is binary, depending on whether the input meets a specified threshold, θ. The "signal" is sent, i.e. the output is set to one, if the activation meets the threshold:

$$y = \begin{cases} 1 & \text{if } u \ge \theta \\ 0 & \text{if } u < \theta \end{cases}$$

Linear combination

In this case, the output unit is simply the weighted sum of its inputs plus a bias term. A number of such linear neurons perform a linear transformation of the input vector. This is usually more useful in the first layers of a network. A number of analysis tools exist based on linear models, such as harmonic analysis, and they can all be used in neural networks with this linear neuron. The bias term allows us to make affine transformations to the data.

See: Linear transformation, Harmonic analysis, Linear filter, Wavelet, Principal component analysis, Independent component analysis, Deconvolution.
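The earlier claim that a multilayer network of linear neurons has an equivalent single-layer network can be checked numerically. The sketch below is an illustration under assumptions: the helper names `linear_layer` and `compose` and all the numbers are made up here. It relies on the identity W2(W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2).

```python
def linear_layer(W, b, x):
    """Layer of linear neurons: each output is a weighted sum of x plus a bias."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def compose(W2, b2, W1, b1):
    """Collapse two linear layers into one: W = W2 W1 and b = W2 b1 + b2."""
    cols = list(zip(*W1))  # columns of W1
    W = [[sum(a * c for a, c in zip(row, col)) for col in cols] for row in W2]
    b = [sum(a * c for a, c in zip(row, b1)) + b2i for row, b2i in zip(W2, b2)]
    return W, b


# Hypothetical weights: layer 1 maps 2 inputs to 3 units, layer 2 maps 3 to 2.
W1, b1 = [[1, 2], [0, -1], [3, 1]], [1, 0, -2]
W2, b2 = [[2, 0, 1], [-1, 1, 0]], [0, 5]
x = [4, -3]

two_layer = linear_layer(W2, b2, linear_layer(W1, b1, x))
W, b = compose(W2, b2, W1, b1)
one_layer = linear_layer(W, b, x)
print(two_layer, one_layer)
```

Both computations give the same vector, which is why a non-linear transfer function is needed before extra layers add anything.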

Sigmoid

See also: Sigmoid function

A fairly simple non-linear function, the sigmoid function, such as the logistic function, also has an easily calculated derivative, which can be important when calculating the weight updates in the network. It thus makes the network more easily manipulable mathematically, and was attractive to early computer scientists who needed to minimize the computational load of their simulations. It was previously commonly seen in multilayer perceptrons. However, recent work has shown sigmoid neurons to be less effective than rectified linear neurons. The reason is that the gradients computed by the backpropagation algorithm tend to diminish towards zero as activations propagate through layers of sigmoidal neurons, making it difficult to optimize neural networks using multiple layers of sigmoidal neurons.

Pseudocode algorithm

The following is a simple pseudocode implementation of a single TLU which takes boolean inputs (true or false), and returns a single boolean output when activated. An object-oriented model is used. No method of training is defined, since several exist. If a purely functional model were used, the class TLU below would be replaced with a function TLU with input parameters threshold, weights, and inputs that returned a boolean value.

    class TLU defined as:
        data member threshold : number
        data member weights : list of numbers of size X

        function member fire( inputs : list of booleans of size X ) : boolean defined as:
            variable T : number
            T ← 0
            for each i in 1 to X :
                if inputs(i) is true :
                    T ← T + weights(i)
                end if
            end for each
            if T > threshold :
                return true
            else:
                return false
            end if
        end function
    end class
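For readers who want something they can run, the pseudocode above translates fairly directly into Python. This is a sketch, not a reference implementation: the length check and the example weights and threshold are additions made here, while the strict `>` comparison follows the pseudocode.

```python
class TLU:
    """Threshold Logic Unit with boolean inputs, mirroring the pseudocode above."""

    def __init__(self, threshold, weights):
        self.threshold = threshold
        self.weights = list(weights)

    def fire(self, inputs):
        """Return True when the summed weights of the active inputs exceed the threshold."""
        if len(inputs) != len(self.weights):
            # Added check (not in the pseudocode): inputs must match the weights in size.
            raise ValueError("inputs and weights must have the same length")
        total = sum(w for w, x in zip(self.weights, inputs) if x)
        return total > self.threshold


# Hypothetical example: with unit weights and threshold 1.5 the unit acts as AND.
and_gate = TLU(threshold=1.5, weights=[1, 1])
print(and_gate.fire([True, True]))
print(and_gate.fire([True, False]))
```

As in the pseudocode, no training method is defined; the weights and threshold are fixed when the object is created.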

Limitations

Simple artificial neurons, such as the McCulloch–Pitts model, are sometimes described as "caricature models", since they are intended to reflect one or more neurophysiological observations, but without regard to realism.[6]

Comparison with biological neuron coding

Research has shown that unary coding is used in the neural circuits responsible for birdsong production.[7][8] The use of unary in biological networks is presumably due to the inherent simplicity of the coding. Another contributing factor could be that unary coding provides a certain degree of error correction.[9]

References

- Potluri, Pushpa Sree (26 November 2014). "Error Correction Capacity of Unary Coding". Retrieved 10 March 2017 – via arXiv.org.

Further reading

- McCulloch, W. and Pitts, W. (1943). "A logical calculus of the ideas immanent in nervous activity". Bulletin of Mathematical Biophysics, 5:115–133.

- Samardak, A. S., Nogaret, A., Janson, N. B., Balanov, A. G., Farrer, I. and Ritchie, D. A. (2009). "Noise-Controlled Signal Transmission in a Multithread Semiconductor Neuron". Phys. Rev. Lett. 102, 226802.

External links

- Artifical [sic] neuron mimicks function of human cells

