begin quote from:

https://www.cnn.com/business/live-news/supreme-court-gonzalez-v-google-2-21-23/h_50eb18fc07a1521c16960ef0501bbb9b

Supreme Court hears Gonzalez v. Google case against Big Tech

By Brian Fung and Tierney Sneed, CNN

Supreme Court justices are worried about a wave of lawsuits and disruption to the internet

From CNN's Brian Fung

A big concern of justices seems to be what happens if the court rules against Google, namely a wave of lawsuits.

Justice Brett Kavanaugh asked Eric Schnapper, representing the plaintiffs, to respond to various friend-of-the-court briefs warning of widespread disruption.

"We have a lot of amicus briefs that we have to take seriously that say this is going to cause" significant disruption, Kavanaugh said.

Schnapper argued that a ruling for Gonzalez would not have far-reaching effects because even if websites could face new liability as a result of the ruling, most suits would likely be thrown out anyway.

"The implications are limited," Schnapper argued, "because the kinds of circumstance in which a recommendation would be actionable are limited."

Many of the briefs Kavanaugh referenced had expressed fears of a deluge of litigation that could overwhelm startups and small businesses, whether or not they held any merit.

Later, Justice Elena Kagan warned that narrowing Section 230 could lead to a wave of lawsuits, even if many of them would eventually be thrown out, in a line of questioning with US Deputy Solicitor General Malcolm Stewart.

"You are creating a world of lawsuits," Kagan said. "Really, anytime you have content, you also have these presentational and prioritization choices that can be subject to suit."

Even as Stewart suggested many such lawsuits might not ultimately lead to anything, Justices Kavanaugh and Roberts appeared to take issue with the potential rise in lawsuits in the first place.

"Lawsuits will be nonstop," Kavanaugh said.

Chief Justice John Roberts mused that under a narrowed version of Section 230, terrorism-related cases might only be a small share of a much wider range of future lawsuits against websites alleging antitrust violations, discrimination, defamation and infliction of emotional distress, just to name a few.

"I wouldn't necessarily agree with 'there would be lots of lawsuits' simply because there are a lot of things to sue about, but they would not be suits that have much likelihood of prevailing, especially if the court makes clear that even after there's a recommendation, the website still can't be treated as the publisher or speaker of the underlying third party," Stewart said.

Section 230 has been mentioned a lot today. Here are key things to know about the law

From CNN's Brian Fung

Congress, the White House and now the US Supreme Court are all focusing their attention on a federal law that’s long served as a legal shield for online platforms.

The Supreme Court is hearing oral arguments on two pivotal cases this week dealing with online speech and content moderation. Central to the arguments is Section 230, a federal law that’s been roundly criticized by both Republicans and Democrats for different reasons but that tech companies and digital rights groups have defended as vital to a functioning internet. In today's oral arguments on Gonzalez v. Google, the law has already come up multiple times.

Tech companies involved in the litigation have cited the 27-year-old statute as part of an argument for why they shouldn’t have to face lawsuits alleging they gave knowing, substantial assistance to terrorist acts by hosting or algorithmically recommending terrorist content.

Here are key things to know about the law:

- Passed in 1996 during the early days of the World Wide Web, Section 230 of the Communications Decency Act was meant to nurture startups and entrepreneurs. The legislation’s text recognized the internet was in its infancy and risked being choked out of existence if website owners could be sued for things other people posted.

- Under Section 230, websites enjoy immunity for moderating content in the ways they see fit — not according to others’ preferences — although the federal government can still sue platforms for violating criminal or intellectual property laws.

- Contrary to what some politicians have claimed, Section 230’s protections do not hinge on a platform being politically or ideologically neutral. The law also does not require that a website be classified as a publisher in order to “qualify” for liability protection. Apart from meeting the definition of an “interactive computer service,” websites need not do anything to gain Section 230’s benefits – they apply automatically.

- The law’s central provision holds that websites (and their users) cannot be treated legally as the publishers or speakers of other people’s content. In plain English, that means any legal responsibility attached to publishing a given piece of content ends with the person or entity that created it, not the platforms on which the content is shared or the users who re-share it.

- The seemingly simple language of Section 230 belies its sweeping impact. Courts have repeatedly accepted Section 230 as a defense against claims of defamation, negligence and other allegations. In the past, it’s protected AOL, Craigslist, Google and Yahoo, building up a body of law so broad and influential as to be considered a pillar of today’s internet. In recent years, however, critics of Section 230 have increasingly questioned the law’s scope and proposed restrictions on the circumstances in which websites may invoke the legal shield.

Plaintiff's attorney says that internet users should be held liable for retweeting or sharing

From CNN's Brian Fung

Under questioning by Justice Amy Coney Barrett, attorney Eric Schnapper, representing the plaintiffs in Gonzalez v. Google, confirmed that under the legal theory he is advancing, Section 230 would not protect individual internet users retweeting, sharing or liking other people's content.

The text of Section 230 explicitly immunizes "users" from liability for the content posted by third parties, not just social media platforms.

Barrett asked Schnapper whether giving a "thumbs-up" to another user's posts, or whether taking actions including "like, retweet, or say 'check this out,'" means that she has "created new content" and thus lost Section 230's protections.

After quibbling briefly with Barrett over the definition of a user, Schnapper acknowledged that the act of liking or retweeting is an act of content creation that should expose the person liking or retweeting to potential liability.

"On your theory, I’m not protected by 230?" Barrett asked.

"That's content you've created," Schnapper replied.

DOJ is now up for questioning

From CNN's Tierney Sneed

Malcolm Stewart, the US deputy solicitor general, is now up for arguments.

The Justice Department is arguing that Section 230's protections for platforms should be read more broadly than what the plaintiffs are arguing, but that the algorithms that platforms use to make recommendations could potentially open up tech companies to liability.

Amy Coney Barrett points to the exit ramp that the court has to dodge the big question about Section 230

From CNN's Tierney Sneed

Justice Amy Coney Barrett referenced the exit ramp the Supreme Court has that would allow it to avoid the big legal question over the scope of Section 230, the relevant law that gives internet platforms certain protections from legal liability.

She pointed to the tech case the court will hear Wednesday, in which the justices will consider whether an anti-terrorism law covers internet platforms for their failure to adequately remove terrorism-related conduct. The same law is being used by the plaintiffs to sue Google in Tuesday's case.

"So if you lose tomorrow, do we even have to reach the section 230 question here? Would you concede that you would lose on that ground here?" Justice Barrett asked Eric Schnapper, the attorney for those who have sued Google.

Justice Elena Kagan: The Supreme Court is not the "nine greatest experts on the internet"

From CNN's Tierney Sneed and Brian Fung

Justice Elena Kagan hinted that she thought that even if the arguments against Google had merits as a policy matter, Congress — rather than the Supreme Court — should be the one that steps in.

"I could imagine a world where you’re right, that none of this stuff gets protection. And you know — every other industry has to internalize the costs of its conduct. Why is it that the tech industry gets a pass? A little bit unclear," Kagan said.

"On the other hand -- we’re a court. We really don’t know about these sorts of things. These are not, like, the nine greatest experts on the internet," she continued.

Following laughter in the courtroom, Kagan said she didn't have to accept "the sky is falling" arguments from Google's lawyers to think these were difficult and uncertain waters for the judicial branch to be wading into.

Maybe Congress wants a system in which Google isn't so broadly protected, she offered: "But isn't that something for Congress to do? Not the court?"

Justices are skeptical of attorney’s argument that YouTube should be held liable for ISIS content

From CNN's Tierney Sneed

Those who sued Google do not appear to be getting a lot of sympathy from the justices.

Across ideological lines, the justices seemed to struggle with even the basics of the arguments the plaintiffs are trying to make.

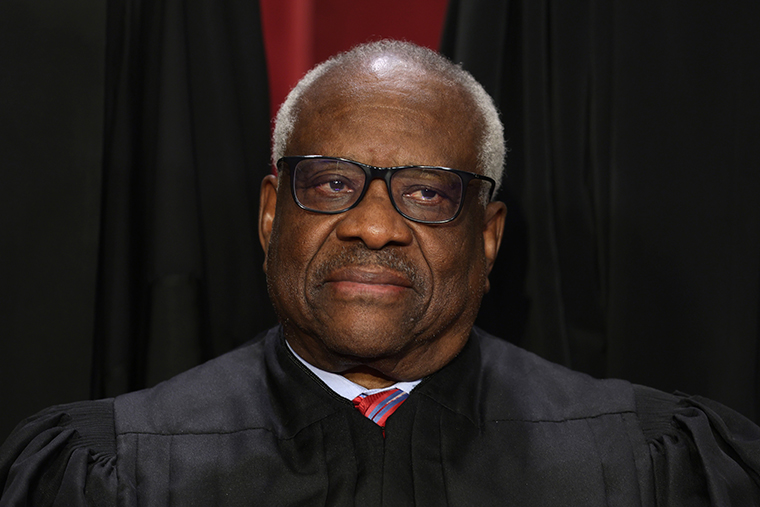

Questioning attorney Eric Schnapper first, Justice Clarence Thomas zeroed in on the fact that the algorithm that the plaintiffs are targeting in their case operates in the same way for ISIS videos as it does for cooking videos.

“I think you're going to have to explain more clearly, if it's neutral in that way, how your claim is set apart from that,” Thomas said.

Later on in the argument, Thomas grilled Schnapper on how a neutral algorithm could amount to aiding and abetting under the relevant anti-terrorism law. He equated it to calling information, asking for Abu Dhabi's phone number, and getting it from them.

"I don't see how that's aiding and abetting," he said.

Liberal justices seemed just as wary of the idea that the algorithm could really make a platform liable for aiding and abetting terrorism.

“I guess the question is how you get yourself from a neutral algorithm to an aiding and abetting – an intent, knowledge,” said Justice Sonia Sotomayor. “There has to be some intent to aid and abet. You have to have knowledge that you’re doing this.”

Plaintiffs' lawyer seemingly reluctant to admit complaint could have sweeping consequences for the internet

From CNN's Brian Fung

Eric Schnapper, the attorney for those who have sued Google, seems to be having difficulty articulating what aspect of YouTube's business should be exposed to liability.

Across numerous questions, Chief Justice John Roberts and Justices Clarence Thomas and Elena Kagan, among others, have expressed confusion about how they can prevent a Supreme Court ruling from unintentionally harming content recommendations related to innocuous content, such as rice pilaf recipes. Schnapper appears reluctant to acknowledge that a ruling in his favor could have wide-ranging implications for content beyond videos posted by ISIS.

"I'm trying to get you to explain to us how something that is standard on YouTube for virtually anything you have an interest in, suddenly amounts to aiding and abetting [terrorism] because you're [viewing] in the ISIS category," Thomas said.

Justice Samuel Alito put it more bluntly: "I admit I'm completely confused by whatever argument you're making at the present time."

Clarence Thomas jumps in with first question about what parts of YouTube's algorithm could make it liable

From CNN's Tierney Sneed

Justice Clarence Thomas jumps in with the first question of the Gonzalez v. Google hearing, asking Eric Schnapper, the attorney for those who have sued Google, about whether there was something specific about YouTube's algorithm for the pro-ISIS videos in question that makes the platform liable in his clients' lawsuit.

"So we're clear about what ... your claim is: Are you saying that YouTube's application of its algorithm is particular to, in this case — that they're using a different algorithm to the one that, say, they're using for cooking videos? Or are they using the same algorithm across the board?"

Thomas, who at one time had a reputation for rarely speaking at oral arguments, was expected to be highly engaged in this case.

While most justices have not previously weighed in on the particular legal issue in this case, Thomas has signaled that he thinks that the statutory provision in question was being interpreted too broadly by lower courts.

NOW: SCOTUS hears oral arguments in Google case with potential to upend the internet

From CNN's Brian Fung

The Supreme Court is hearing oral arguments in the first of two cases this week with the potential to reshape how online platforms handle speech and content moderation.

The oral arguments on Tuesday are for a case known as Gonzalez v. Google, which zeroes in on whether the tech giant can be sued because of its subsidiary YouTube’s algorithmic promotion of terrorist videos on its platform.

According to the plaintiffs in the case — the family of Nohemi Gonzalez, who was killed in a 2015 ISIS attack in Paris — YouTube’s targeted recommendations violated a US antiterrorism law by helping to radicalize viewers and promote ISIS’s worldview.

The allegation seeks to carve out content recommendations so that they do not receive protections under Section 230, a federal law that has for decades largely protected websites from lawsuits over user-generated content. If successful, it could expose tech platforms to an array of new lawsuits and may reshape how social media companies run their services.

What tech companies are saying: Google and other tech companies have said that exempting targeted recommendations from Section 230 immunity would increase the legal risks associated with ranking, sorting and curating online content, a basic feature of the modern internet. Google has claimed that in such a scenario, websites would seek to play it safe by either removing far more content than is necessary, or by giving up on content moderation altogether and allowing even more harmful material on their platforms.

Who's arguing today's case

From CNN's Brian Fung

As we wait for oral arguments to begin, here's what we know about how the day will unfold.

Eric Schnapper, an attorney representing the Gonzalez family, will kick things off. Schnapper will have 20 minutes to speak, although realistically the argument could run longer.

Next, Malcolm Stewart will speak on behalf of the US government, which has argued that Section 230 should not protect a website's targeted recommendations of content. Stewart is budgeted for 15 minutes.

Google's attorney Lisa Blatt will follow for about 35 minutes, and then Schnapper will have a chance to offer concluding remarks — though, again, everything today could run for much longer than anticipated.

Gorsuch is ill and will participate in today's oral arguments remotely

From CNN's Ariane de Vogue

Justice Neil Gorsuch is under the weather and will participate in oral arguments in Gonzalez v. Google remotely, according to the Supreme Court.

The court did not specify the type of illness.

During the Covid-19 pandemic, oral arguments were conducted remotely.

Your guide to today's Supreme Court arguments on social media moderation — and what is at stake

From CNN's Brian Fung

The Supreme Court is set to hear back-to-back oral arguments Tuesday and Wednesday on two cases that could significantly reshape online speech and content moderation.

The outcome of the oral arguments could determine whether tech platforms and social media companies can be sued for recommending content to their users or for supporting acts of international terrorism by hosting terrorist content. It marks the Supreme Court's first-ever review of a hot-button federal law that largely protects websites from lawsuits over user-generated content.

First up Tuesday is the Gonzalez v. Google case. At the heart of the legal battle is Section 230 of the Communications Decency Act, a nearly 30-year-old federal law that courts have repeatedly said provides broad protections to tech platforms but that has since come under scrutiny alongside growing criticism of Big Tech’s content moderation decisions.

The case involving Google zeroes in on whether it can be sued because of its subsidiary YouTube’s algorithmic promotion of terrorist videos on its platform.

According to the plaintiffs in the case — the family of Nohemi Gonzalez, who was killed in a 2015 ISIS attack in Paris — YouTube’s targeted recommendations violated a US antiterrorism law by helping to radicalize viewers and promote ISIS’s worldview.

The allegation seeks to carve out content recommendations so that they do not receive protections under Section 230, potentially exposing tech platforms to more liability for how they run their services.

Google and other tech companies have said that interpretation of Section 230 would increase the legal risks associated with ranking, sorting and curating online content, a basic feature of the modern internet. Google has claimed that in such a scenario, websites would seek to play it safe by either removing far more content than is necessary, or by giving up on content moderation altogether and allowing even more harmful material on their platforms.

Friend-of-the-court filings by Craigslist, Microsoft, Yelp and others have suggested the stakes are not limited to algorithms and could also end up affecting virtually anything on the web that might be construed as making a recommendation. That might mean even average internet users who volunteer as moderators on various sites could face legal risks, according to a filing by Reddit and several volunteer Reddit moderators. Oregon Democratic Sen. Ron Wyden and former California Republican Rep. Chris Cox — the original co-authors of Section 230 — argued to the court that Congress’ intent in passing the law was to give websites broad discretion to moderate content as they saw fit.

The Biden administration has also weighed in on the case. In a brief filed in December, it argued Section 230 does protect Google and YouTube from lawsuits “for failing to remove third-party content, including the content it has recommended.” But, the government’s brief argued, those protections do not extend to Google’s algorithms because they represent the company’s own speech, not that of others.

The Supreme Court will hear oral arguments Wednesday on the second case, Twitter v. Taamneh, which will decide whether social media companies can be sued for aiding and abetting a specific act of international terrorism when the platforms have hosted user content that expresses general support for the group behind the violence without referring to the specific terrorist act in question.

Rulings in the cases are expected by the end of June.

Much of the momentum for changing Section 230 has fallen to the courts

From CNN's Brian Fung

The deadlock over Section 230 has thrown much of the momentum for changing the law to the courts — most notably, the US Supreme Court, which now has an opportunity this term to dictate how far the law extends.

Tech critics have called for added legal exposure and accountability.

“The massive social media industry has grown up largely shielded from the courts and the normal development of a body of law. It is highly irregular for a global industry that wields staggering influence to be protected from judicial inquiry,” the Anti-Defamation League wrote in a Supreme Court brief.

For the tech giants, and even for many of Big Tech’s fiercest competitors, narrowing the law would be a bad thing, because it would undermine what has allowed the internet to flourish. It would potentially put many websites and users into unwitting and abrupt legal jeopardy, they say, and it would dramatically change how some websites operate in order to avoid liability.

The social media platform Reddit argued in a Supreme Court brief that if Section 230 is narrowed so its protections do not cover a site’s recommendations of content a user might enjoy, that would “dramatically expand Internet users’ potential to be sued for their online interactions.”

“‘Recommendations’ are the very thing that make Reddit a vibrant place,” wrote the company and several volunteer Reddit moderators. “It is users who upvote and downvote content, and thereby determine which posts gain prominence and which fade into obscurity.”

People would stop using Reddit, and moderators would stop volunteering, the brief argued, under a legal regime that “carries a serious risk of being sued for ‘recommending’ a defamatory or otherwise tortious post that was created by someone else.”

While this week’s oral arguments won’t be the end of the debate over Section 230, the outcome of the cases could lead to hugely significant changes the internet has never before seen — for better or for worse.

Section 230 has faced bipartisan criticism for years

From CNN's Brian Fung

Much of the criticism of Section 230 has come from conservatives who say that the law lets social media platforms suppress right-leaning views for political reasons.

By safeguarding platforms’ freedom to moderate content as they see fit, Section 230 does shield websites from lawsuits that might arise from that type of viewpoint-based content moderation, though social media companies have said they do not make content decisions based on ideology, but rather on violations of their policies.

The Trump administration tried to turn some of those criticisms into concrete policy that would have had significant consequences, if it had succeeded. For example, in 2020, the Justice Department released a legislative proposal for changes to Section 230 that would create an eligibility test for websites seeking the law’s protections. That same year, the White House issued an executive order calling on the Federal Communications Commission to interpret Section 230 in a more narrow way.

The executive order faced a number of legal and procedural problems, not least of which was that the FCC is not part of the judicial branch, does not regulate social media or content moderation decisions, and is an independent agency that, by law, does not take direction from the White House.

Even though the Trump-era efforts to curtail Section 230 never bore fruit, conservatives are still looking for opportunities to do so. And they aren’t alone. Since 2016, when social media platforms’ role in spreading Russian election disinformation broke open a national dialogue about the companies’ handling of toxic content, Democrats have increasingly railed against Section 230.

By safeguarding platforms’ freedom to moderate content as they see fit, Democrats have said, Section 230 has allowed websites to escape accountability for hosting hate speech and misinformation that others have recognized as objectionable but that social media companies can’t or won’t remove themselves.

The result is a bipartisan hatred for Section 230, even if the two parties cannot agree on why Section 230 is flawed or what policies might appropriately take its place.

“I would be prepared to make a bet that if we took a vote on a plain Section 230 repeal, it would clear this committee with virtually every vote,” said Rhode Island Democratic Sen. Sheldon Whitehouse at a hearing last week of the Senate Judiciary Committee. “The problem, where we bog down, is that we want 230-plus. We want to repeal 230 and then have ‘XYZ.’ And we don’t agree on what the ‘XYZ’ are.”
