Autoencoder

An autoencoder is a type of artificial neural network used to learn efficient data codings in an unsupervised manner.[1] The aim of an autoencoder is to learn a representation (encoding) for a set of data, typically for dimensionality reduction, by training the network to ignore signal “noise”. Along with the reduction side, a reconstructing side is learnt, where the autoencoder tries to generate from the reduced encoding a representation as close as possible to its original input, hence its name. Variants exist, aiming to force the learned representations to assume useful properties.[2] Examples are regularized autoencoders (Sparse, Denoising and Contractive), which are effective in learning representations for subsequent classification tasks,[3] and Variational autoencoders, with applications as generative models.[4] Autoencoders are applied to many problems, from facial recognition[5] to acquiring the semantic meaning of words.[6][7]

Introduction

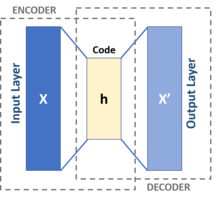

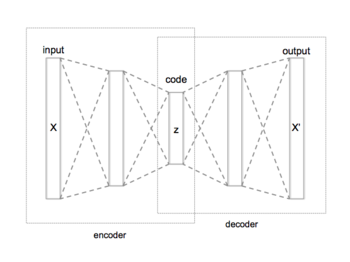

An autoencoder is a neural network that learns to copy its input to its output. It has an internal (hidden) layer that describes a code used to represent the input, and it is constituted by two main parts: an encoder that maps the input into the code, and a decoder that maps the code to a reconstruction of the input.

Performing the copying task perfectly would simply duplicate the signal, and this is why autoencoders usually are restricted in ways that force them to reconstruct the input approximately, preserving only the most relevant aspects of the data in the copy.

The idea of autoencoders has been popular in the field of neural networks for decades. The first applications date to the 1980s.[2][8][9] Their most traditional application was dimensionality reduction or feature learning, but the autoencoder concept became more widely used for learning generative models of data.[10][11] Some of the most powerful AIs in the 2010s involved sparse autoencoders stacked inside deep neural networks.[12]

Basic architecture

The simplest form of an autoencoder is a feedforward, non-recurrent neural network similar to the single-layer perceptrons that make up multilayer perceptrons (MLP), employing an input layer and an output layer connected by one or more hidden layers. The output layer has the same number of nodes (neurons) as the input layer. Its purpose is to reconstruct its inputs (minimizing the difference between the input and the output) instead of predicting a target value.

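An autoencoder consists of two parts, the encoder and the decoder, which can be defined as transitions $\phi$ and $\psi$ such that:

$$\phi : \mathcal{X} \rightarrow \mathcal{F}$$

$$\psi : \mathcal{F} \rightarrow \mathcal{X}$$

$$\phi, \psi = \underset{\phi, \psi}{\operatorname{arg\,min}} \, \|X - (\psi \circ \phi) X\|^{2}$$

In the simplest case, given one hidden layer, the encoder stage of an autoencoder takes the input $\mathbf{x} \in \mathbb{R}^{d} = \mathcal{X}$ and maps it to $\mathbf{h} \in \mathbb{R}^{p} = \mathcal{F}$:

$$\mathbf{h} = \sigma(\mathbf{W}\mathbf{x} + \mathbf{b})$$

This image $\mathbf{h}$ is usually referred to as the code, latent variables, or latent representation. Here, $\sigma$ is an element-wise activation function such as a sigmoid function or a rectified linear unit, $\mathbf{W}$ is a weight matrix and $\mathbf{b}$ is a bias vector. Weights and biases are usually initialized randomly and then updated iteratively during training through backpropagation. The decoder stage then maps $\mathbf{h}$ to a reconstruction $\mathbf{x'}$ of the same shape as $\mathbf{x}$:

$$\mathbf{x'} = \sigma'(\mathbf{W'}\mathbf{h} + \mathbf{b'})$$

where $\sigma'$, $\mathbf{W'}$ and $\mathbf{b'}$ for the decoder may be unrelated to the corresponding $\sigma$, $\mathbf{W}$ and $\mathbf{b}$ for the encoder.

Autoencoders are trained to minimise reconstruction errors (such as squared errors), often referred to as the "loss":

$$\mathcal{L}(\mathbf{x}, \mathbf{x'}) = \|\mathbf{x} - \mathbf{x'}\|^{2} = \|\mathbf{x} - \sigma'(\mathbf{W'}\sigma(\mathbf{W}\mathbf{x} + \mathbf{b}) + \mathbf{b'})\|^{2}$$

where the loss is usually averaged over some input training set.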

As mentioned before, the training of an autoencoder is performed through backpropagation of the error, just like a regular feedforward neural network.
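The encoder, decoder, loss and backpropagation step described in this section can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the decoder is taken to be linear (identity activation), the toy data, layer sizes, learning rate and the names `init_params`, `forward` and `train_step` are all hypothetical choices made for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(d, p, rng):
    # Small random weights; encoder (W, b) and decoder (W_dec, b_dec) are untied.
    return {
        "W": rng.normal(0.0, 0.1, size=(p, d)),
        "b": np.zeros(p),
        "W_dec": rng.normal(0.0, 0.1, size=(d, p)),
        "b_dec": np.zeros(d),
    }

def forward(params, X):
    # X has shape (n, d); each row is one input vector.
    H = sigmoid(X @ params["W"].T + params["b"])       # code, shape (n, p)
    X_rec = H @ params["W_dec"].T + params["b_dec"]    # linear decoder (assumption)
    return H, X_rec

def loss(X, X_rec):
    # Squared reconstruction error, averaged over the batch.
    return float(np.mean(np.sum((X - X_rec) ** 2, axis=1)))

def train_step(params, X, lr):
    # One full-batch gradient descent step with hand-derived backpropagation.
    n = X.shape[0]
    H, X_rec = forward(params, X)
    d_rec = 2.0 * (X_rec - X) / n                          # dL/dX_rec
    d_pre = (d_rec @ params["W_dec"]) * H * (1.0 - H)      # back through the sigmoid
    grads = {
        "W_dec": d_rec.T @ H,
        "b_dec": d_rec.sum(axis=0),
        "W": d_pre.T @ X,
        "b": d_pre.sum(axis=0),
    }
    for name, g in grads.items():
        params[name] -= lr * g
    return loss(X, X_rec)                                  # loss before the update

rng = np.random.default_rng(0)
# Hypothetical toy data: 8-dimensional points lying on a 2-dimensional subspace.
X = 0.5 * rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))
params = init_params(d=8, p=3, rng=rng)
history = [train_step(params, X, lr=0.05) for _ in range(500)]
final_loss = loss(X, forward(params, X)[1])
print(f"loss before training: {history[0]:.3f}, after: {final_loss:.3f}")
```

The hidden layer has 3 units for 8 inputs, so the code is a compressed representation of each input row.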

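Should the feature space $\mathcal{F}$ have lower dimensionality than the input space $\mathcal{X}$, the feature vector produced by the encoder can be regarded as a compressed representation of the input. This is the case of undercomplete autoencoders. If the hidden layers are larger than (overcomplete autoencoders) or equal to the input layer, or the hidden units are given enough capacity, an autoencoder can potentially learn the identity function and become useless.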

Variations

Regularized autoencoders

Various techniques exist to prevent autoencoders from learning the identity function and to improve their ability to capture important information and learn richer representations.

Sparse autoencoder (SAE)

When representations are learned in a way that encourages sparsity, improved performance is obtained on classification tasks.[14] A sparse autoencoder may include more (rather than fewer) hidden units than inputs, but only a small number of the hidden units are allowed to be active at the same time.[12] This sparsity constraint forces the model to respond to the unique statistical features of the training data.

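Specifically, a sparse autoencoder is an autoencoder whose training criterion adds a sparsity penalty $\Omega(\mathbf{h})$ on the code layer $\mathbf{h}$ to the reconstruction loss $\mathcal{L}(\mathbf{x}, \mathbf{x'})$:

$$\mathcal{L}(\mathbf{x}, \mathbf{x'}) + \Omega(\mathbf{h})$$

Recalling that $\mathbf{h} = \sigma(\mathbf{W}\mathbf{x} + \mathbf{b})$, the penalty encourages the model to activate (i.e. output a value close to 1) specific areas of the network on the basis of the input data, while inactivating all other neurons (i.e. output a value close to 0).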

This sparsity can be achieved by formulating the penalty terms in different ways.

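- One way is to exploit the Kullback-Leibler (KL) divergence.[14][15][16][17] Let

  $$\hat{\rho_j} = \frac{1}{m} \sum_{i=1}^{m} [h_j(x_i)]$$

  be the average activation of the hidden unit $j$, averaged over the $m$ training examples. To encourage most of the neurons to be inactive, $\hat{\rho_j}$ needs to be close to 0. This method therefore enforces the constraint $\hat{\rho_j} = \rho$, where $\rho$ is the sparsity parameter, a value close to zero. The penalty term penalizes $\hat{\rho_j}$ for deviating significantly from $\rho$:

  $$\sum_{j=1}^{s} KL(\rho \,\|\, \hat{\rho_j}) = \sum_{j=1}^{s} \left[ \rho \log \frac{\rho}{\hat{\rho_j}} + (1 - \rho) \log \frac{1 - \rho}{1 - \hat{\rho_j}} \right]$$

  where $j$ sums over the $s$ hidden nodes in the hidden layer, and $KL(\rho \,\|\, \hat{\rho_j})$ is the KL divergence between a Bernoulli random variable with mean $\rho$ and a Bernoulli random variable with mean $\hat{\rho_j}$.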

- Another way to achieve sparsity is by applying L1 or L2 regularization terms on the activation, scaled by a certain parameter $\lambda$.[18] For instance, in the case of L1 the loss function becomes

  $$\mathcal{L}(\mathbf{x}, \mathbf{x'}) + \lambda \sum_{i} |h_i|$$

- A further proposed strategy to force sparsity is to manually zero all but the strongest hidden unit activations (k-sparse autoencoder).[19] The k-sparse autoencoder is based on a linear autoencoder (i.e. with linear activation function) and tied weights. The identification of the strongest activations can be achieved by sorting the activities and keeping only the first k values, or by using ReLU hidden units with thresholds that are adaptively adjusted until the k largest activities are identified. This selection acts like the previously mentioned regularization terms in that it prevents the model from reconstructing the input using too many neurons.[19]
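The three sparsity strategies listed above can be sketched as small NumPy functions. This is an illustrative sketch only: the function names, the default values of `rho` and `lam`, and the clipping constant `eps` are assumptions introduced here, and the hidden activations `H` are assumed to lie in (0, 1) for the KL penalty.

```python
import numpy as np

def kl_sparsity_penalty(H, rho=0.05, eps=1e-8):
    # H has shape (m, s): activations of s hidden units over m training examples.
    # rho_hat[j] is the average activation of hidden unit j.
    rho_hat = np.clip(H.mean(axis=0), eps, 1.0 - eps)
    terms = rho * np.log(rho / rho_hat) + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat))
    return float(np.sum(terms))

def l1_sparsity_penalty(H, lam=1e-3):
    # lambda * sum_i |h_i|, averaged over the examples in the batch.
    return float(lam * np.mean(np.sum(np.abs(H), axis=1)))

def k_sparse(H, k):
    # Keep the k largest activations in each row and zero out the rest.
    idx = np.argpartition(H, -k, axis=1)[:, -k:]
    out = np.zeros_like(H)
    np.put_along_axis(out, idx, np.take_along_axis(H, idx, axis=1), axis=1)
    return out

H_demo = np.array([[0.1, 0.9, 0.5, 0.3]])
print(k_sparse(H_demo, k=2))   # only the entries 0.9 and 0.5 survive
```

Either penalty would be added to the reconstruction loss during training, whereas `k_sparse` would be applied to the code itself before decoding.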

Denoising autoencoder (DAE)

Denoising autoencoders (DAE) try to achieve a good representation by changing the reconstruction criterion.[2]

Indeed, DAEs take a partially corrupted input and are trained to recover the original undistorted input. In practice, the objective of denoising autoencoders is that of cleaning the corrupted input, or denoising. Two assumptions are inherent to this approach:

- Higher level representations are relatively stable and robust to the corruption of the input;

- To perform denoising well, the model needs to extract features that capture useful structure in the input distribution.[3]

In other words, denoising is advocated as a training criterion for learning to extract useful features that will constitute better higher level representations of the input.[3]

The training process of a DAE works as follows:

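- The initial input $\mathbf{x}$ is corrupted into $\tilde{\mathbf{x}}$ through a stochastic mapping $\tilde{\mathbf{x}} \sim q_D(\tilde{\mathbf{x}} \mid \mathbf{x})$.

- The corrupted input $\tilde{\mathbf{x}}$ is then mapped to a hidden representation with the same process as the standard autoencoder, $\mathbf{h} = \sigma(\mathbf{W}\tilde{\mathbf{x}} + \mathbf{b})$.

- From the hidden representation the model reconstructs $\mathbf{x'} = \sigma'(\mathbf{W'}\mathbf{h} + \mathbf{b'})$.[3]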

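The model's parameters are trained to minimize the average reconstruction error over the training data, that is, the difference between the reconstruction and the original uncorrupted input. Each time a training example is presented to the model, a new corrupted version is generated stochastically.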

The above-mentioned training process could be applied with any kind of corruption process. Some examples are additive isotropic Gaussian noise, masking noise (a fraction of the input chosen at random for each example is forced to 0) and salt-and-pepper noise (a fraction of the input chosen at random for each example is set to its minimum or maximum value with uniform probability).[3]

The corruption of the input is performed only during training. Once the model has learnt the optimal parameters, in order to extract the representations from the original data no corruption is added.
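The three corruption processes named above, and the fact that the loss compares the reconstruction with the clean input, can be sketched as follows. The helper names, the assumed input range of [0, 1] for salt-and-pepper noise, and the `reconstruct` callable (a stand-in for a trained encoder and decoder) are hypothetical and introduced only for this illustration.

```python
import numpy as np

def _pick(shape, fraction, rng):
    # Boolean mask with exactly round(fraction * d) True entries in each row.
    n, d = shape
    k = int(round(fraction * d))
    ranks = rng.random((n, d)).argsort(axis=1).argsort(axis=1)
    return ranks < k

def gaussian_noise(X, sigma, rng):
    # Additive isotropic Gaussian noise.
    return X + rng.normal(0.0, sigma, size=X.shape)

def masking_noise(X, fraction, rng):
    # A random fraction of each example is forced to 0.
    return np.where(_pick(X.shape, fraction, rng), 0.0, X)

def salt_and_pepper(X, fraction, rng, low=0.0, high=1.0):
    # A random fraction of each example is set to the minimum or maximum value.
    chosen = _pick(X.shape, fraction, rng)
    extremes = np.where(rng.random(X.shape) < 0.5, low, high)
    return np.where(chosen, extremes, X)

def denoising_loss(reconstruct, X, corrupt):
    # The model sees the corrupted input, but the error is measured
    # against the original, uncorrupted X.
    X_tilde = corrupt(X)
    return float(np.mean(np.sum((X - reconstruct(X_tilde)) ** 2, axis=1)))

rng = np.random.default_rng(0)
X = np.full((5, 10), 0.5)
masked = masking_noise(X, 0.3, rng)
peppered = salt_and_pepper(X, 0.2, rng)
noisy = gaussian_noise(X, 0.1, rng)
```

At inference time none of these functions would be called: the clean input is passed directly to the encoder.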

Contractive autoencoder (CAE)

The contractive autoencoder adds an explicit regularizer in its objective function that forces the model to learn an encoding robust to slight variations of input values. This regularizer corresponds to the squared Frobenius norm of the Jacobian matrix of the encoder activations with respect to the input. Since the penalty is applied to training examples only, this term forces the model to learn useful information about the training distribution. The final objective function has the following form:

$$\mathcal{L}(\mathbf{x}, \mathbf{x'}) + \lambda \sum_{i} \|\nabla_{x} h_i\|^{2}$$

where $\mathcal{L}(\mathbf{x}, \mathbf{x'})$ is the reconstruction loss, $h_i$ is the $i$-th hidden activation and $\lambda$ scales the penalty.

The autoencoder is termed contractive because CAE is encouraged to map a neighborhood of input points to a smaller neighborhood of output points.[2]
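For a sigmoid encoder the contractive penalty has a simple closed form, because the Jacobian of $\sigma(\mathbf{W}\mathbf{x} + \mathbf{b})$ with respect to $\mathbf{x}$ has entries $h_j (1 - h_j) W_{ji}$. The sketch below assumes that specific encoder and uses hypothetical names (`contractive_penalty`, `jacobian`); it checks the closed form against the explicitly built Jacobian.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def contractive_penalty(W, b, X):
    # Squared Frobenius norm of the encoder Jacobian, averaged over the batch.
    # For h = sigmoid(W x + b): ||J||_F^2 = sum_j (h_j (1 - h_j))^2 * sum_i W_ji^2
    H = sigmoid(X @ W.T + b)                    # (n, p)
    slope_sq = (H * (1.0 - H)) ** 2             # (n, p)
    row_norm_sq = np.sum(W ** 2, axis=1)        # (p,)
    return float(np.mean(slope_sq @ row_norm_sq))

def jacobian(W, b, x):
    # Explicit Jacobian dh/dx for a single input x, shape (p, d).
    h = sigmoid(W @ x + b)
    return (h * (1.0 - h))[:, None] * W

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))
b = rng.normal(size=3)
X = rng.normal(size=(4, 5))
explicit = float(np.mean([np.sum(jacobian(W, b, x) ** 2) for x in X]))
print(np.isclose(contractive_penalty(W, b, X), explicit))
```

In training, this value would be multiplied by the penalty weight and added to the reconstruction loss.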

DAE is connected to CAE: in the limit of small Gaussian input noise, DAEs make the reconstruction function resist small but finite-sized input perturbations, while CAEs make the extracted features resist infinitesimal input perturbations.

Variational autoencoder (VAE)

Variational autoencoders (VAEs) are generative models, akin to generative adversarial networks.[20] Their association with this group of models derives mainly from the architectural affinity with the basic autoencoder (the final training objective has an encoder and a decoder), but their mathematical formulation differs significantly.[21] VAEs are directed probabilistic graphical models (DPGM) whose posterior is approximated by a neural network, forming an autoencoder-like architecture.[20][22] Unlike discriminative modeling that aims to learn a predictor given observation, generative modeling tries to learn how the data is generated, and to reflect the underlying causal relations. Causal relations have the potential for generalizability.[4]

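Variational autoencoder models make strong assumptions concerning the distribution of latent variables. They use a variational approach for latent representation learning, which results in an additional loss component and a specific estimator for the training algorithm called the Stochastic Gradient Variational Bayes (SGVB) estimator.[10] It assumes that the data is generated by a directed graphical model $p_\theta(\mathbf{x} \mid \mathbf{h})$ and that the encoder is learning an approximation $q_\phi(\mathbf{h} \mid \mathbf{x})$ to the posterior distribution $p_\theta(\mathbf{h} \mid \mathbf{x})$, where $\phi$ and $\theta$ denote the parameters of the encoder (recognition model) and decoder (generative model) respectively. The objective of a VAE has the following form:

$$\mathcal{L}(\phi, \theta, \mathbf{x}) = D_{\mathrm{KL}}\big(q_\phi(\mathbf{h} \mid \mathbf{x}) \,\|\, p_\theta(\mathbf{h})\big) - \mathbb{E}_{q_\phi(\mathbf{h} \mid \mathbf{x})}\big(\log p_\theta(\mathbf{x} \mid \mathbf{h})\big)$$

Here, $D_{\mathrm{KL}}$ stands for the Kullback-Leibler divergence. The prior over the latent variables is usually set to be the centred isotropic multivariate Gaussian $p_\theta(\mathbf{h}) = \mathcal{N}(\mathbf{0}, \mathbf{I})$.

Commonly, the shapes of the variational and the likelihood distributions are chosen such that they are factorized Gaussians:

$$q_\phi(\mathbf{h} \mid \mathbf{x}) = \mathcal{N}(\boldsymbol{\rho}(\mathbf{x}), \boldsymbol{\omega}^{2}(\mathbf{x}) \mathbf{I})$$

$$p_\theta(\mathbf{x} \mid \mathbf{h}) = \mathcal{N}(\boldsymbol{\mu}(\mathbf{h}), \boldsymbol{\sigma}^{2}(\mathbf{h}) \mathbf{I})$$

where $\boldsymbol{\rho}(\mathbf{x})$ and $\boldsymbol{\omega}^{2}(\mathbf{x})$ are the encoder outputs, while $\boldsymbol{\mu}(\mathbf{h})$ and $\boldsymbol{\sigma}^{2}(\mathbf{h})$ are the decoder outputs.

VAEs have been criticized because they generate blurry images.[24] However, researchers employing this model were showing only the mean of the distributions, $\boldsymbol{\mu}(\mathbf{h})$, rather than a sample of the learned Gaussian distribution

$$\mathbf{x} \sim \mathcal{N}(\boldsymbol{\mu}(\mathbf{h}), \boldsymbol{\sigma}^{2}(\mathbf{h}) \mathbf{I}).$$

These samples were shown to be overly noisy due to the choice of a factorized Gaussian distribution.[24][25] Employing a Gaussian distribution with a full covariance matrix,

$$\mathbf{x} \sim \mathcal{N}(\boldsymbol{\mu}(\mathbf{h}), \boldsymbol{\Sigma}(\mathbf{h})),$$

could solve this issue, but is computationally intractable and numerically unstable, as it requires estimating a covariance matrix from a single data sample. However, later research[24][25] showed that a restricted approach where the inverse matrix $\boldsymbol{\Sigma}^{-1}(\mathbf{h})$ is sparse could generate images with high-frequency details.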

Large-scale VAE models have been developed in different domains to represent data in a compact probabilistic latent space. Examples include VQ-VAE[26] for image generation and Optimus[27] for language modeling.
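When the approximate posterior and the likelihood are both factorized Gaussians and the prior is a standard normal, the VAE objective described earlier in this section (a KL term minus an expected log-likelihood) can be estimated with one latent sample per input. The sketch below is an assumption-laden illustration: variances are parameterized as log-variances, a single Monte Carlo sample is drawn with the reparameterization used by SGVB-style estimators, and `decode` is a hypothetical callable standing in for a decoder network.

```python
import numpy as np

def reparameterize(mean, log_var, rng):
    # Draw h = mean + std * eps with eps ~ N(0, I), so the sample is a
    # differentiable function of the encoder outputs.
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mean, log_var):
    # Closed-form KL( N(mean, diag(exp(log_var))) || N(0, I) ), one value per row.
    return 0.5 * np.sum(np.exp(log_var) + mean ** 2 - 1.0 - log_var, axis=-1)

def gaussian_nll(x, mu, log_var):
    # Negative log-likelihood of x under a factorized Gaussian, one value per row.
    return 0.5 * np.sum(np.log(2.0 * np.pi) + log_var + (x - mu) ** 2 / np.exp(log_var), axis=-1)

def vae_loss(x, enc_mean, enc_log_var, decode, rng):
    # KL term plus a one-sample estimate of the expected negative log-likelihood.
    h = reparameterize(enc_mean, enc_log_var, rng)
    mu, log_var = decode(h)
    return float(np.mean(kl_to_standard_normal(enc_mean, enc_log_var) + gaussian_nll(x, mu, log_var)))

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 6))
enc_mean, enc_log_var = np.zeros((4, 2)), np.zeros((4, 2))
# Hypothetical decoder that ignores h and always predicts a unit Gaussian at the origin.
toy_decode = lambda h: (np.zeros((h.shape[0], 6)), np.zeros((h.shape[0], 6)))
value = vae_loss(x, enc_mean, enc_log_var, toy_decode, rng)
```

With this toy decoder the KL term is zero, so `value` reduces to the average Gaussian negative log-likelihood of `x` under a unit Gaussian.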

Advantages of depth

Autoencoders are often trained with a single layer encoder and a single layer decoder, but using deep (many-layered) encoders and decoders offers many advantages.[2]

- Depth can exponentially reduce the computational cost of representing some functions.[2]

- Depth can exponentially decrease the amount of training data needed to learn some functions.[2]

- Experimentally, deep autoencoders yield better compression compared to shallow or linear autoencoders.[28]

Training

Geoffrey Hinton developed a technique for training many-layered deep autoencoders. His method involves treating each neighbouring set of two layers as a restricted Boltzmann machine so that pretraining approximates a good solution, then using backpropagation to fine-tune the results.[28] This model is known as a deep belief network.

Researchers have debated whether joint training (i.e. training the whole architecture together with a single global reconstruction objective to optimize) would be better for deep auto-encoders.[29] A 2015 study showed that joint training learns better data models along with more representative features for classification as compared to the layerwise method.[29] However, their experiments showed that the success of joint training depends heavily on the regularization strategies adopted.[29][30]

Applications

The two main applications of autoencoders are dimensionality reduction and information retrieval,[2] but modern variations have proved successful when applied to other tasks.

Dimensionality reduction

Dimensionality reduction was one of the first deep learning applications, and one of the early motivations to study autoencoders.[2] The objective is to find a proper projection method that maps data from a high-dimensional feature space to a low-dimensional feature space.[2]

One milestone paper on the subject was Hinton's 2006 paper:[28] in that study, he pretrained a multi-layer autoencoder with a stack of RBMs and then used their weights to initialize a deep autoencoder with gradually smaller hidden layers until hitting a bottleneck of 30 neurons. The resulting 30 dimensions of the code yielded a smaller reconstruction error compared to the first 30 components of a principal component analysis (PCA), and learned a representation that was qualitatively easier to interpret, clearly separating data clusters.[2][28]

Representing data in a lower-dimensional space can improve performance on tasks such as classification.[2] Indeed, many forms of dimensionality reduction place semantically related examples near each other,[32] aiding generalization.

Principal component analysis

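If linear activations are used, or only a single sigmoid hidden layer, then the optimal solution to an autoencoder is strongly related to principal component analysis (PCA).[33][34] The weights of an autoencoder with a single hidden layer of size $p$ (where $p$ is less than the size of the input) span the same vector subspace as the one spanned by the first $p$ principal components, and the output of the autoencoder is an orthogonal projection onto this subspace. The autoencoder weights are not equal to the principal components, and are generally not orthogonal, yet the principal components may be recovered from them using the singular value decomposition.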

However, the potential of autoencoders resides in their non-linearity, allowing the model to learn more powerful generalizations compared to PCA, and to reconstruct the input with significantly lower information loss.[28]
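One way to see the relation between a linear autoencoder and PCA is to build, by hand, a pair of encoder and decoder weights that are not orthonormal yet reproduce the PCA reconstruction exactly. The sketch below does not train anything: the mixing matrix `A`, the toy data and the variable names are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5)) @ rng.normal(size=(5, 5))
Xc = X - X.mean(axis=0)                      # PCA works on centred data

p = 2
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
V_p = Vt[:p].T                               # (d, p): first p principal directions

# PCA reconstruction: orthogonal projection onto the span of V_p.
X_pca = Xc @ V_p @ V_p.T

# A linear autoencoder whose weights span the same subspace but are
# neither orthonormal nor equal to the principal directions.
A = np.array([[2.0, 1.0], [0.5, 3.0]])       # any invertible p x p matrix
W_enc = A @ V_p.T                            # encoder weights, (p, d)
W_dec = V_p @ np.linalg.inv(A)               # decoder weights, (d, p)
X_ae = (Xc @ W_enc.T) @ W_dec.T

# The decoder columns span the PCA subspace: both projectors coincide.
Q, _ = np.linalg.qr(W_dec)
same_subspace = np.allclose(Q @ Q.T, V_p @ V_p.T)
same_output = np.allclose(X_ae, X_pca)
print(same_subspace, same_output)
```

Because the reconstruction depends only on the product of decoder and encoder, the factor `A` cancels, which is why the individual weight matrices are not unique.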

Information retrieval

Information retrieval benefits particularly from dimensionality reduction in that search can become more efficient in certain kinds of low dimensional spaces. Autoencoders were indeed applied to semantic hashing, proposed by Salakhutdinov and Hinton in 2007.[32] By training the algorithm to produce a low-dimensional binary code, all database entries could be stored in a hash table mapping binary code vectors to entries. This table would then support information retrieval by returning all entries with the same binary code as the query, or slightly less similar entries by flipping some bits from the query encoding.
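The hash-table lookup described above can be sketched in plain Python. The binary codes here are hand-written placeholders rather than the output of a trained autoencoder, and the names `build_table`, `neighbours` and `lookup` are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

def build_table(codes):
    # codes maps each entry to its binary code; the table maps code -> entries.
    table = defaultdict(list)
    for entry, code in codes.items():
        table[tuple(code)].append(entry)
    return table

def neighbours(code, radius):
    # Yield every code within Hamming distance `radius`, nearest first.
    code = tuple(code)
    for r in range(radius + 1):
        for positions in combinations(range(len(code)), r):
            flipped = list(code)
            for i in positions:
                flipped[i] ^= 1              # flip one bit of the query code
            yield tuple(flipped)

def lookup(table, query_code, radius=0):
    # Exact matches come first, then entries found by flipping up to `radius` bits.
    hits = []
    for candidate in neighbours(query_code, radius):
        hits.extend(table.get(candidate, []))
    return hits

codes = {
    "doc_a": (0, 1, 1, 0),
    "doc_b": (0, 1, 1, 0),
    "doc_c": (0, 1, 1, 1),
    "doc_d": (1, 0, 0, 1),
}
table = build_table(codes)
exact = lookup(table, (0, 1, 1, 0))
nearby = lookup(table, (0, 1, 1, 0), radius=1)
```

The number of candidate codes grows combinatorially with the radius, so this style of lookup is only practical for small radii.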

Anomaly detection

Another application for autoencoders is anomaly detection.[36][37][38][39] By learning to replicate the most salient features in the training data under some of the constraints described previously, the model is encouraged to learn to precisely reproduce the most frequently observed characteristics. When facing anomalies, the model should worsen its reconstruction performance. In most cases, only data with normal instances are used to train the autoencoder; in others, the frequency of anomalies is small compared to the observation set so that its contribution to the learned representation could be ignored. After training, the autoencoder will accurately reconstruct "normal" data, while failing to do so with unfamiliar anomalous data.[37] Reconstruction error (the error between the original data and its low dimensional reconstruction) is used as an anomaly score to detect anomalies.[37]

Recent literature has however shown that certain autoencoding models can, counterintuitively, be very good at reconstructing anomalous examples and consequently not able to reliably perform anomaly detection.[40][41]
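Using reconstruction error as an anomaly score can be sketched as follows. The `reconstruct` argument stands for any trained autoencoder; in this illustration it is replaced by a hypothetical stand-in that projects onto a single direction, and the 0.99 quantile threshold is an arbitrary assumption rather than a recommended setting.

```python
import numpy as np

def reconstruction_error(X, reconstruct):
    # Squared error between each row and its reconstruction: the anomaly score.
    return np.sum((X - reconstruct(X)) ** 2, axis=1)

def fit_threshold(normal_scores, quantile=0.99):
    # Choose a cut-off from scores observed on (mostly) normal training data.
    return float(np.quantile(normal_scores, quantile))

def flag_anomalies(X, reconstruct, threshold):
    return reconstruction_error(X, reconstruct) > threshold

rng = np.random.default_rng(0)
u = np.array([1.0, 1.0]) / np.sqrt(2.0)

def toy_reconstruct(X):
    # Stand-in for a trained autoencoder: keep only the component along u.
    return np.outer(X @ u, u)

# "Normal" data lie close to the line spanned by u.
normal = np.outer(rng.normal(size=200), u) + 0.05 * rng.normal(size=(200, 2))
threshold = fit_threshold(reconstruction_error(normal, toy_reconstruct))

queries = np.array([[3.0, -3.0],    # far from the line: poorly reconstructed
                    [3.0, 3.0]])    # on the line: reconstructed almost exactly
flags = flag_anomalies(queries, toy_reconstruct, threshold)
```

The second query is far from the origin but still scores as normal, which shows that the score measures distance from the learned structure rather than magnitude.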

Image processing

The characteristics of autoencoders are useful in image processing.

One example can be found in lossy image compression, where autoencoders outperformed other approaches and proved competitive against JPEG 2000.[42][43]

Another useful application of autoencoders in image preprocessing is image denoising.[44][45][46]

Autoencoders have found use in more demanding contexts such as medical imaging, where they have been used for image denoising[47] as well as super-resolution.[48][49] In image-assisted diagnosis, experiments have applied autoencoders for breast cancer detection[50] and for modelling the relation between the cognitive decline of Alzheimer's disease and the latent features of an autoencoder trained with MRI.[51]

Drug discovery

In 2019, molecules generated with variational autoencoders were validated experimentally in mice.[52][53]

Popularity prediction

Recently, a stacked autoencoder framework produced promising results in predicting popularity of social media posts,[54] which is helpful for online advertising strategies.

Machine translation

Autoencoders have been applied to machine translation, an approach usually referred to as neural machine translation (NMT).[55][56] In NMT, the source text is treated as a sequence to be encoded by the learning procedure, while on the decoder side the text in the target language is generated. Language-specific autoencoders incorporate linguistic features into the learning procedure, such as Chinese decomposition features.[57]
